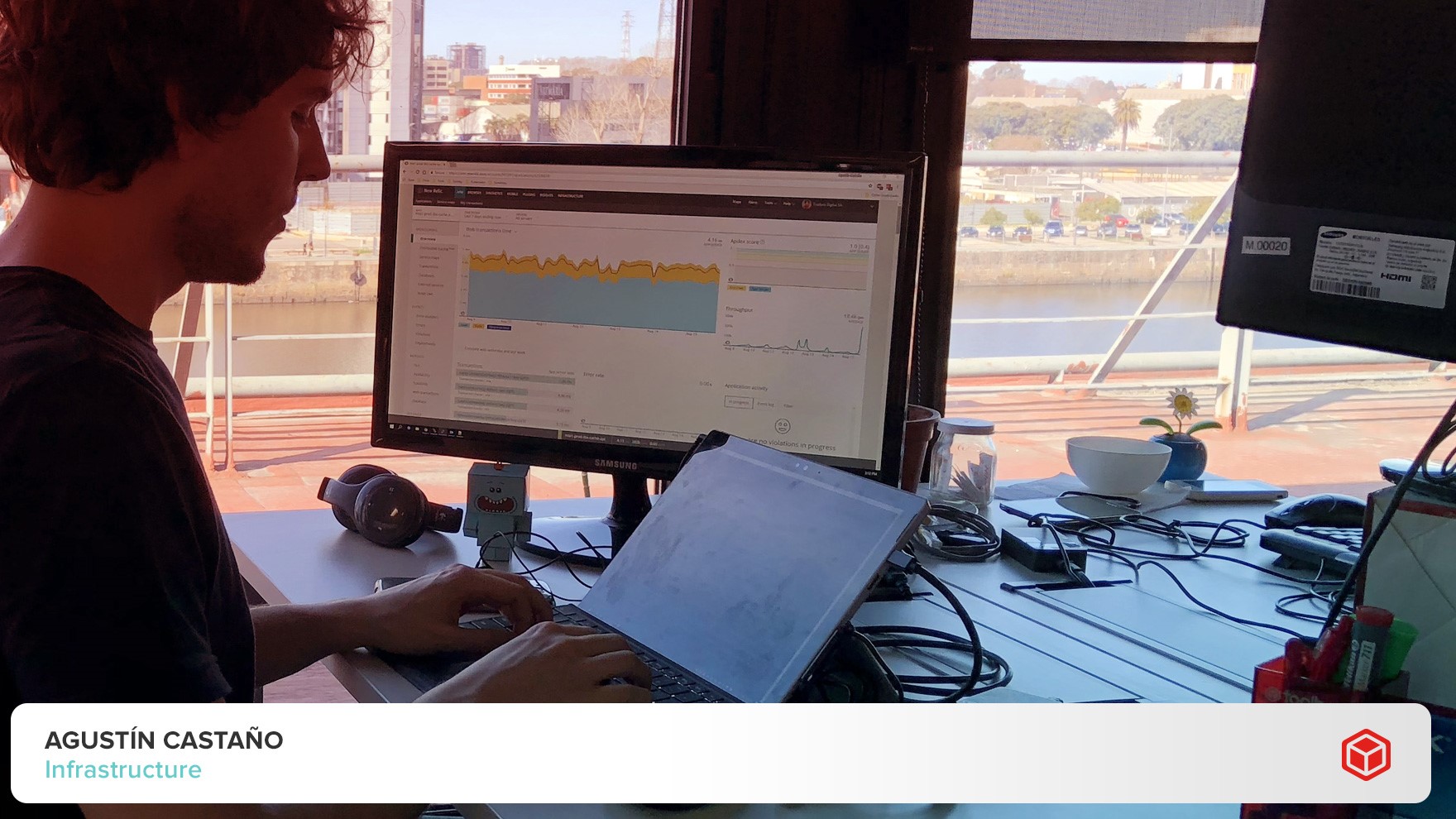

By Agustin Castaño (Infrastructure)

Bringing a new service to market is a major challenge that spans several stages, including strategic planning, architecture, design, development, and deployment. Somewhere in that process a stress test is necessary: exercising the service under conditions similar to those it will face in real use.

Stress-testing tools let us exercise parts of the Toolbox platform with a series of requests that simulate user actions, at a workload similar to or higher than the one expected in real conditions. These tests let us estimate how much load a given infrastructure configuration or set of APIs can support, locate bottlenecks, identify the most heavily used services, and determine whether there is a way to scale when the current response capacity is exceeded.

Stress testing is not only useful in the stages before production; it also improves how issues in our products are resolved, so that the products continue to stand out for their up-to-date technology. In this sense, stress tests honor the DevOps culture, since the operations and development teams must run them together.

The tools we use in Toolbox for the stress test include:

- Tsung: An open-source, multi-protocol, distributed load-testing tool. It can stress HTTP, WebDAV, SOAP, PostgreSQL, MySQL, LDAP, MQTT, and Jabber/XMPP servers. A script written in XML tells the tool what to do (the steps a user takes when using the service, which the tool then imitates) and lets us scale the number of simulated users to thousands or hundreds of thousands.

- Apache JMeter: An open-source, 100% Java application that, like Tsung, runs load tests to measure behavior and performance. Although it was originally designed for web applications, its scope has expanded: today it covers SOAP/REST web services, FTP, databases via JDBC, message-oriented middleware via JMS, mail, and Java objects, among other test scenarios. It includes an interface for building the script used to load the application under test.

- New Relic: Not a testing tool but an APM (Application Performance Monitoring) tool, and the one we use to monitor systems at Toolbox. The previous two tools offer reports and graphs of how the tests are running and the state of the nodes, but the holistic view New Relic provides lets us understand how the system itself is behaving: among other things, how much of the response time is spent on database access, how much on executing code, and how much on calls to third-party services.

Of course, we don’t rely on New Relic results alone. Tsung metrics, for example, tell us whether the test is running correctly, whether the test itself has design errors, and whether there is what is called back pressure: the system is not actually being stressed because, to remain stable, it simply stops attending to the requests from the test tool.
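As a rough illustration of the back pressure check described above, the sketch below compares how many requests the load generator sent with how many responses came back in each reporting window. The `Interval` shape, the `flag_back_pressure` helper, and the 0.9 threshold are all hypothetical and are not part of Tsung's output format; a real check would read the counters from whatever report the tool produces.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    """One reporting window from a load-test run (hypothetical shape)."""
    sent: int       # requests the load generator issued in the window
    completed: int  # responses it received in the window


def flag_back_pressure(intervals, min_ratio=0.9):
    """Return the indexes of windows where completions lag far behind requests."""
    flagged = []
    for i, window in enumerate(intervals):
        if window.sent == 0:
            continue  # nothing was sent, so there is nothing to compare
        if window.completed / window.sent < min_ratio:
            flagged.append(i)
    return flagged


# Made-up sample data: the second window answers only 64% of what was sent.
samples = [Interval(1000, 990), Interval(1000, 640), Interval(1000, 980)]
print(flag_back_pressure(samples))  # prints [1]
```

A flagged window does not prove back pressure on its own, but it marks where to look before trusting the response-time numbers from that part of the run.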

Who has taken my response time?

Every day, thousands of users/subscribers access the platforms of our clients (content providers and TV operators). One of our services allows a page on those platforms to be built quickly from a single request: in practice, it returns all the information for the already-processed page, so the parts of the page do not have to be fetched in separate requests. This development belongs to our Cloud Experience product.

When testing this service, we noticed that the response time was high, on the order of one second, so we built a stress test for this particular service. In this case we used Tsung: we wrote the XML script to simulate user behavior and scaled to 100,000 simulated users per minute.
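To put that figure in perspective, here is a small back-of-the-envelope calculation. It assumes that "100,000 simulated users per minute" means new simulated users arriving at that rate; the helper names are ours, for illustration only.

```python
def per_second_rate(users_per_minute: int) -> float:
    """Convert an arrival rate per minute into arrivals per second."""
    return users_per_minute / 60


def mean_interarrival_ms(users_per_minute: int) -> float:
    """Average gap between two consecutive arrivals, in milliseconds."""
    return 60_000 / users_per_minute


rate = per_second_rate(100_000)
gap = mean_interarrival_ms(100_000)
print(f"{rate:.0f} new users per second, one every {gap:.1f} ms")
# prints: 1667 new users per second, one every 0.6 ms
```

With a response time on the order of one second, each arriving user's request stays in flight for roughly a thousand times longer than the gap between arrivals, which is why even a modest per-request cost adds up quickly at this load.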

The New Relic results showed high database consumption: it was not only higher than expected but also far more frequent. After an analysis carried out jointly by the Architecture and Infrastructure areas, we found that information needed to build the platform was being requested constantly (for example, the data of the sporting event the subscriber wanted to watch), yet that information had not been pre-processed into a cache.

Once the cache was created and the service was configured to read this information from the cache instead of the database, response times were halved. We ran the test again, which now showed results within the expected margins, and the service went into production.
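The kind of change described here can be sketched as a read-through cache with a time-to-live. This is a minimal illustration under our own assumptions, not the implementation used in Cloud Experience: `EventCache`, `load_event_from_db`, the 60-second TTL, and the `match-42` key are all hypothetical, and the in-memory dictionary stands in for whatever cache store a production system would use.

```python
import time


class EventCache:
    """Serve values from memory and call the loader only on a miss or expiry."""

    def __init__(self, loader, ttl_seconds=60.0, clock=time.monotonic):
        self._loader = loader      # function that reads from the database
        self._ttl = ttl_seconds    # how long a cached value stays valid
        self._clock = clock        # injectable clock, useful for testing
        self._entries = {}         # key -> (expires_at, value)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]        # cache hit: no database access
        value = self._loader(key)  # cache miss: read once from the database
        self._entries[key] = (now + self._ttl, value)
        return value


db_calls = []  # records every simulated database read


def load_event_from_db(event_id):
    """Stand-in for the real database query (hypothetical)."""
    db_calls.append(event_id)
    return {"id": event_id, "title": f"Event {event_id}"}


cache = EventCache(load_event_from_db, ttl_seconds=60.0)
for _ in range(3):
    event = cache.get("match-42")
print(event["title"], "- database reads:", len(db_calls))
# prints: Event match-42 - database reads: 1
```

Three page builds trigger a single database read here; the TTL bounds how stale the event data can get, and the right value depends on how often that data actually changes.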